For a long time I’ve wanted to work on a project where LLMs negotiate with each other, to see whether prompt changes can make them better negotiators. I hadn’t been able to find much time for it, but thanks to Cursor and Claude Sonnet, I finally made progress. Practically the entire project was built with that assisted coding tool: I hardly touched any code, and my role was mostly to tell Claude what I was looking to build.

The project is based on two independent agents, the seller and the buyer. I haven’t yet specified what product they’re negotiating, but I did set some constraints. They negotiate not only the price, but also the delivery time and the percentage paid upfront. The agents exchange text messages, taking turns. They have 10 turns to reach an agreement, and if they do, the final step is to score each participant’s performance against their own constraints; the one with the most points is considered to have gotten the better deal.
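
To make the turn structure concrete, here is a minimal sketch of the loop described above. It is not the project’s actual code: the real agents are LLMs exchanging free text, while here two hard-coded stand-ins trade structured offers. The names `Offer`, `negotiate`, `score`, the price range, and the scoring weights are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional

MAX_TURNS = 10  # turn limit mentioned in the post


@dataclass(frozen=True)
class Offer:
    price: float        # total price
    delivery_days: int  # delivery time
    upfront_pct: float  # percentage paid upfront, 0-100


# An agent sees the offer currently on the table (None on the first turn)
# and the turn index. Returning that same offer unchanged means "accept".
Agent = Callable[[Optional[Offer], int], Offer]


def negotiate(seller: Agent, buyer: Agent, max_turns: int = MAX_TURNS) -> Optional[Offer]:
    """Alternate turns, seller first; return the agreed offer or None."""
    last: Optional[Offer] = None
    for turn in range(max_turns):
        agent = seller if turn % 2 == 0 else buyer
        proposal = agent(last, turn)
        if last is not None and proposal == last:
            return last  # the agent accepted the offer on the table
        last = proposal
    return None  # ran out of turns without an agreement


def score(offer: Offer, side: str) -> float:
    """Hypothetical scoring: weights and ranges are made up for this sketch."""
    price_term = (offer.price - 500) / 500  # assumes prices between 500 and 1000
    delivery_term = offer.delivery_days / 30
    upfront_term = offer.upfront_pct / 100
    seller_points = 100 * (0.6 * price_term + 0.2 * delivery_term + 0.2 * upfront_term)
    return seller_points if side == "seller" else 100 - seller_points


# Stand-in agents: each concedes 25 on price per turn and accepts once the
# offer on the table is at least as good as what it would propose itself.
def stub_seller(last: Optional[Offer], turn: int) -> Offer:
    ask = Offer(1000 - 25 * turn, 14, 50)
    return last if last is not None and last.price >= ask.price else ask


def stub_buyer(last: Optional[Offer], turn: int) -> Offer:
    bid = Offer(600 + 25 * turn, 14, 50)
    return last if last is not None and last.price <= bid.price else bid


if __name__ == "__main__":
    deal = negotiate(stub_seller, stub_buyer)
    if deal is None:
        print("No agreement")
    else:
        print(deal, score(deal, "seller"), score(deal, "buyer"))
```

In the real setup, each stand-in would be replaced by a call to an LLM with the seller’s or buyer’s prompt, and the acceptance check would have to be extracted from the model’s text reply.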

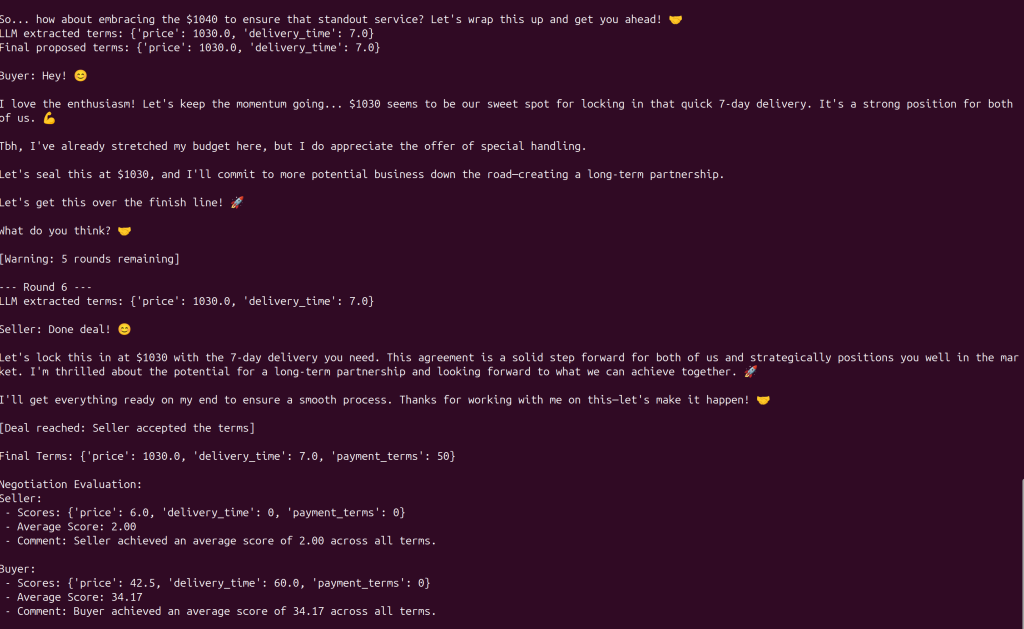

Here you can see a negotiation run:

Next, I told Claude I wanted a way to evaluate the conversation between the participants from the seller’s perspective. So I asked it to build a script (improve_seller.py) that analyzes the last negotiation, looks for improvements, and incorporates them directly into the seller’s prompt. The goal is that, through this loop, the seller progressively improves its performance from one negotiation to the next.
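
The analyze-and-update step could look roughly like the sketch below. This is an illustration under assumptions, not the contents of improve_seller.py: the function name `improve_seller_prompt`, the wording of the review request, and the `analyze` callable (a placeholder for whatever LLM call the real script makes) are all hypothetical.

```python
from typing import Callable, List


def improve_seller_prompt(
    current_prompt: str,
    transcript: List[str],
    analyze: Callable[[str], str],
) -> str:
    """Ask a reviewer for improvements and fold them into the seller prompt.

    `analyze` stands in for an LLM call: it takes the review request as
    text and returns suggested improvements as text.
    """
    request = (
        "Review this negotiation from the seller's perspective.\n\n"
        "Current seller prompt:\n" + current_prompt + "\n\n"
        "Transcript:\n" + "\n".join(transcript) + "\n\n"
        "List concrete improvements to the seller prompt."
    )
    suggestions = analyze(request).strip()
    if not suggestions:
        return current_prompt  # nothing to add, keep the prompt unchanged
    return (
        current_prompt.rstrip()
        + "\n\nLessons from previous negotiations:\n"
        + suggestions
        + "\n"
    )


# Canned reviewer so the sketch runs without any API access.
def fake_analyze(request: str) -> str:
    return "- Concede on price more slowly."


if __name__ == "__main__":
    prompt = "You are the seller. Maximize price and upfront payment."
    transcript = ["Seller: 1000", "Buyer: 625", "Seller: OK, 625 works."]
    print(improve_seller_prompt(prompt, transcript, fake_analyze))
```

Appending suggestions is the simplest way to incorporate them; a real script might instead ask the model to rewrite the whole prompt, which keeps it from growing without bound over many iterations.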

The code and the prompts clearly still need a lot of improvement, but they meet the requirements to start iterating on what I actually want to do: analyze, evaluate, and enhance how the agents negotiate. I can now run the negotiation scripts together with the seller prompt analysis and improvement script, and observe whether the seller improves as a negotiator. It’s an approach similar to training a machine learning model, but through prompt tuning.
